Dave Crader explains what a long-tail keyword is and how it can be used to bring more traffic to your website. He'll review the many benefits of long tail keywords and explain why Google engineer Matt Cutts recommends new bloggers target them. If you have any questions or feedback please leave a comment below.

Hi, I am Dave Crader, Web Marketing Strategist here at Evolve Creative Group. Today we are going to go over long tail keywords: what they are and why they are so powerful.

A long tail keyword can be defined as any keyword phrase longer than three words. Long tail keywords are very specific. Something like this would be considered a long tail keyword: "how to make a maple bacon milkshake?" It is much more specific than something like "bacon milkshake." In fact, over 70% of the keyword searches on Google are these long tail keyword phrases.
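The definition above can be sketched in a few lines of Python. This is only an illustration of the "longer than three words" rule; the function names `word_count` and `is_long_tail` are made up for this example and are not part of any keyword tool.

```python
def word_count(keyword: str) -> int:
    """Count whitespace-separated words in a search phrase."""
    return len(keyword.split())


def is_long_tail(keyword: str, min_words: int = 4) -> bool:
    """Treat a phrase as long tail when it is longer than three words."""
    return word_count(keyword) >= min_words


for phrase in ["bacon milkshake", "how to make a maple bacon milkshake"]:
    print(phrase, "->", "long tail" if is_long_tail(phrase) else "short tail")
```

Word count is a rough proxy. It says nothing about how specific or how competitive a phrase really is, so treat the result as a first filter rather than a verdict.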

When you are optimizing for long tail keywords you can create blog posts and web pages targeted specifically to these keyword phrases. If someone is searching for "bacon milkshake" it could be anything. They could want to see pictures of a bacon milkshake, they could want to get recipes on how to make it, they could want to see a video; you really don't know. But with something like "how to make a maple bacon milkshake?" it is very specific. If you make a blog post specific to that keyword, then those visitors are going to stay on your site longer, complete your goals and convert more. So, long tail keywords are very important, and they are less competitive because there are not as many websites making content for these long tails. There are a lot of websites making content for short keywords like "bacon milkshake."

Looking at it from Google's perspective, there are not enough web pages on the Internet currently to cover every single long tail keyword. There are millions of these keywords that are undiscovered. So, to appease Google and to get rewarded by Google, if you make content that is going to help Google out, they are going to help you out. They are going to rank this content higher, because it is more relevant. They want relevant search results. They want to be able to provide relevant search results to their users. So, you help them and they are going to help you.

Looking at it from a different perspective, Google is making money from the content that you create. When you create content that is specific to its searchers, the searchers are having a better user experience and they are going to remember that great user experience and they are going to use Google more often. Matt Cutts, Google's distinguished engineer, actually recommends that you start off all new blogs targeting long tail keywords. Over time, you will be able to pull from these shorter tail keywords, but to start off, you need to target the keywords that don't have a million sites already trying to pull from them.

In summary, a long tail keyword is any keyword longer than three words. It is a very specific search query that you can provide a very specific answer to. Users are going to stay on your website longer and they are going to convert more.

Penalties are bad. They’ll wipe your online income, get you fired and generally just make you really REALLY sad. You can, and certainly should, memorize Google’s Webmaster Guidelines, but just know that your website isn’t ever really completely safe. It’s Google’s engine and they can change the rules whenever they want to – overnight and without notice. This is not a democracy. The company will do whatever it takes to adhere to its mission statement:

“To organize the world’s information and make it universally accessible and useful.”

The only way to protect your website from Google’s penalties and filters is to think like a Google engineer. If you’re doing something to market your website that you wouldn’t feel comfortable telling a team of Google engineers about, you probably shouldn’t be doing it.

So, how do Google engineers think, you ask? Well, to start, they don’t like you manipulating their search results. Manipulation can be defined by this one simple sentence:

“Any action performed to your website with the intent of raising your non-paid search engine rankings in an unnatural way.”

This usually refers to things like:

The problem with this definition is its broadness. Let’s say, for example, you’ve recently hired an SEO Practitioner to improve your website’s traffic from search engines. The SEO recommends you remove duplicate content, improve your site speed and write unique and relevant Meta titles and Meta descriptions for each page of your site. They are recommending these things to improve your non-paid search engine rankings, but their intent is not to manipulate search engine results. Their intent is to help Google’s spiders find and naturally index your content and create a great user experience. Help Google’s spiders find and index your content = good. Tell Google’s spiders that your content is better than others = bad. Matt Cutts, Google’s distinguished engineer, does a decent job explaining this in his video:

Enough introduction; let's get on with the post already! Before I start I’d like to mention that this post was inspired by a great question we received from a client the other day. Let me explain:

We noticed that several pages of the client’s site had been copied and posted on another website, so we recommended the client reach out and attempt to get the copied content removed to avoid duplicate content filters from Google. Notice I said ‘duplicate content filters’ not ‘duplicate content penalties’ – more on that in a minute. The client performed a Google search on duplicate content penalties and came across this article on Google’s Webmaster Central Blog which rightfully caused a bit of confusion. Basically, the post says “duplicate content really isn’t an issue unless it is being used in a way to manipulate search engines.” After reading the post the client asked us “how important is it that we get the duplicate content removed if we’re not intentionally manipulating search engines?” That’s a great question that is impossible to answer without going over the concept of Google’s penalties and filters.

In general, there are filters and there are penalties that Google uses to organize search results. A filter can occur without any human intervention, but a penalty usually only occurs when a Google employee physically reviews your website. In the following sections we’ll be going over both.

One type of Google filter is activated when multiple versions of the exact same webpage are published on the Internet. This is very common in news where one article is often copied word for word onto news aggregators such as Yahoo News and Fark. For example, The Washington Post recently published an article titled:

Presidential debate: 7 questions that should be asked … and probably won’t

Copy that title and paste it into Google with quotes around it to find that exact sentence elsewhere on the Internet.
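If you would rather script that check than paste by hand, a minimal sketch could look like the following. It only builds the search URL with the phrase wrapped in double quotes; the helper name `exact_match_search_url` is hypothetical, and the sketch does not fetch or parse any results.

```python
from urllib.parse import urlencode


def exact_match_search_url(phrase: str) -> str:
    """Build a Google search URL that wraps the phrase in double quotes."""
    return "https://www.google.com/search?" + urlencode({"q": f'"{phrase}"'})


title = "Presidential debate: 7 questions that should be asked … and probably won’t"
print(exact_match_search_url(title))
```

Open the printed URL in a browser to see which copies of the sentence Google shows and which it omits.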

Notice how Google omits (filters) websites from search results that appear to be duplicates of the original. They’re doing this because they want the original article to get credit. They’re not placing a penalty on the omitted websites. They’re just not showing them. In this instance Google chose the correct original source, but that’s not always the case. Google isn’t perfect and it doesn’t have a brain. It could choose the incorrect original source and filter your website by accident. This reasoning is what led us to recommend the removal of duplicate content for our client.

Another type of Google filter is activated when Google releases one of its ‘Quality Updates’ such as Penguin or Panda. These quality updates are usually released when Google engineers identify patterns (or ‘footprints’) in their algorithm that are manipulating search results. These updates are usually on-going and released randomly over the course of several months and years.

Back in February 2011 Google tweaked its algorithm with the first version of the Panda quality update. This update was targeted at “low-quality sites—sites which are low value add for users, copy content from other websites or sites that are just not very useful.” according to Google’s press release. This is a fairly broad description that was later clarified in a Q & A session with Google’s top engineers. You might also be interested in SEOmoz’s Analysis of Winners vs Losers of the Panda Update. Basically, this update went after duplicate content that doesn’t add anything original to the Internet. It’s been updated to version 3.9.2 since its original release date but the premise remains the same. If you’re going to write something for the Internet make sure you’re adding your own unique opinion to the article and not just rewriting other articles that have already been published. This update is fairly old now so I’m not going to go too deep into it (because I don’t have anything original to say – it’s been out for over a year and there are thousands of articles covering it already), but if you have a question please feel free to leave a comment.

Just recently, in April 2012, Google tweaked its algorithm once again with the first version of the Penguin quality update. This update was targeted at websites using “black hat webspam techniques” according to Google’s press release. Again, Google is extremely broad in its description of the update and doesn’t really explain what changed, but thanks to conclusive studies we can safely confirm that the first Penguin update was mainly about backlink anchor text (or link text) manipulation. Before Penguin, the anchor text used when linking to an external website was a significantly overpowered ranking factor for that external website. This caused many SEO Practitioners to build backlinks with anchor texts stuffed with shopping related keywords like ‘buy yoga towel’ or ‘plumbers in Akron Ohio’ so their websites could rank higher on Google for those keywords. Let’s look at an example:

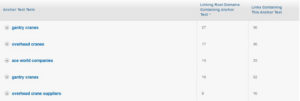

Ace World Companies is a manufacturer of Overhead Cranes, Hoists and more. According to opensiteexplorer.org their website, aceworldcompanies.com, has 170 backlinks pointed to it. If you navigate to ajaxtutorials.com/ajax-tutorials/ajax-comment-form-in-vb-net (we’re not linking to this page because Google’s filters could mistakenly consider us part of the link spam network if we did) you’ll notice there is a link to AceWorldCompanies.com in the comment section.


This is exactly the type of manipulative backlink that Google targeted with its Penguin update. The link is unnatural because it is not helpful to readers of the Ajax article. It’s also manipulative because it’s using a keyword stuffed anchor text of ‘overhead cranes.’ Most webmasters link to other websites using anchor texts like ‘click here,’ ‘Company Name’ and ‘www.CompanyName.com.’ If a website has 17 backlinks all with ‘overhead cranes’ as the anchor text, like AceWorldCompanies.com, it’s going to be fairly easy for Google’s algorithm to pick up on it and filter it with Penguin. I can’t say for sure if aceworldcompanies.com was filtered with Penguin since I don’t have access to their Google Analytics, but with a backlink portfolio like this:

It’s probably a yes. Sorry for outing you Ace World Companies, but you were probably filtered by now anyway.
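To make the anchor text pattern concrete, here is a rough sketch that measures how much of a backlink profile uses one keyword anchor. The sample list is invented for illustration (it is not real data for any site), and the 50% cutoff is an assumption chosen for the example, not a threshold Google has published.

```python
from collections import Counter


def anchor_text_shares(anchors: list[str]) -> dict[str, float]:
    """Return each anchor text's share of all backlinks, most common first."""
    normalized = [a.strip().lower() for a in anchors]
    total = len(normalized)
    return {text: count / total for text, count in Counter(normalized).most_common()}


def looks_over_optimized(anchors: list[str], keyword: str, max_share: float = 0.5) -> bool:
    """Flag a profile when one keyword anchor exceeds max_share (an assumed cutoff)."""
    return anchor_text_shares(anchors).get(keyword.lower(), 0.0) > max_share


# Hypothetical backlink anchors, invented for illustration only.
sample = ["overhead cranes"] * 17 + ["click here", "Company Name", "www.CompanyName.com"]
print(anchor_text_shares(sample))
print(looks_over_optimized(sample, "overhead cranes"))
```

A natural profile tends to be dominated by brand names, bare URLs and generic phrases, so a single commercial keyword taking most of the share is the footprint worth looking for.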

Manual penalties used to be a rarity because Google did not want to add personal bias to its search results, but with the influx of spam these last few years, Google has been forced to be more hands on. Two of the most well-known manual penalties were those placed on Overstock.com and JCPenney.com (I’m writing in past tense because the penalties have since been lifted). The Overstock penalty story broke in The Wall Street Journal and the JCPenney penalty story broke in The New York Times. Basically, JCPenney and Overstock were buying links that passed PageRank, which is a clear violation of Google’s Webmaster Guidelines. A backlink is supposed to be a third-party vote that Google can rely on to rank websites higher in search results.

If you buy links it’s not giving Google that third-party vote. It’s the same idea as the anchor text manipulation filter in Penguin. Remember, Google does not want you to tell them where your content should rank. They want to leave that up to a mathematical equation.

Paid links can be extremely hard to detect algorithmically, so Google usually has to rely on news stories and user submitted spam reports to find them. Had The Wall Street Journal and The New York Times not outed the two companies they probably would have been safe, but when the stories broke they made Google look bad, so Google had to penalize them. Google couldn’t penalize them for long though, because users were still searching for ‘JCPenney’ and ‘Overstock’ on Google. If a user searches for JCPenney and Google does not return a link to the JCPenney website, then that user is going to have a bad experience and is going to use other search engines like Bing or Yahoo to find what they need. Funny how that works, isn’t it?

In summary, the criteria Google uses to determine penalties change often. In fact, while writing this post I realized that Google updated its link scheme policy on 10/2/12 to include this fun little tidbit in bold:

“Buying or selling links that pass PageRank. This includes exchanging money for links, or posts that contain links; exchanging goods or services for links; or sending someone a “free” product in exchange for them writing about it and including a link.”

I’d be willing to bet that 90-100% of the websites on the Internet, including Google’s own, have violated and are still violating Google’s broad policies. Rather than stress out about it, just ask yourself these two simple questions:

1. Are you making Google look bad?

2. Would you feel comfortable telling a Google engineer what you’re doing to market your website?

Learn How-To Recover From Google Penalties and Filters in our next post.

So what is keyword intent and why is it so important? Keyword intent simply refers to the intent of the searcher. The intent of the ‘how to soundproof a room’ searcher is to find a guide or video explaining how to soundproof a room. If someone landed on the company’s homepage after searching ‘how to soundproof a room’ they would not get that guide and would have a bad experience. That’s why the company chose keywords with 10,000 fewer searches for the homepage. Although they were searched less, they were more relevant to the content on the homepage. SEO is all about relevancy!

Keyword research isn’t necessarily hard, but there’s definitely much more to it than meets the eye. Sure, you can read a post like this and get a pretty good idea of how it should be done, but you won’t fully understand one of the most important aspects of keyword research until you’ve grasped the concept of keyword intent.

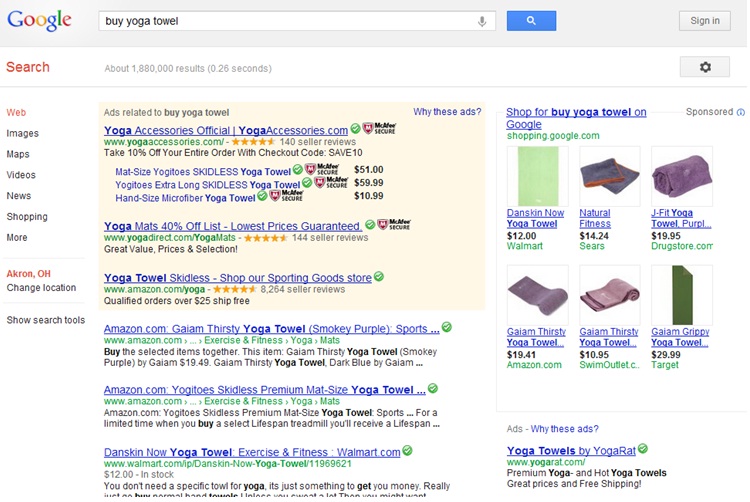

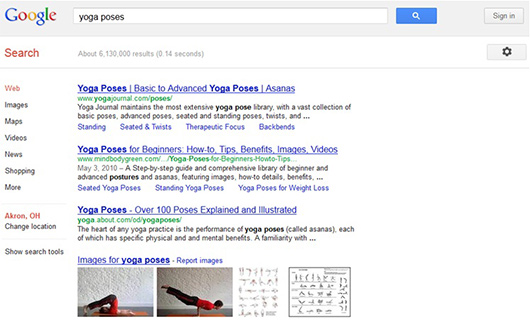

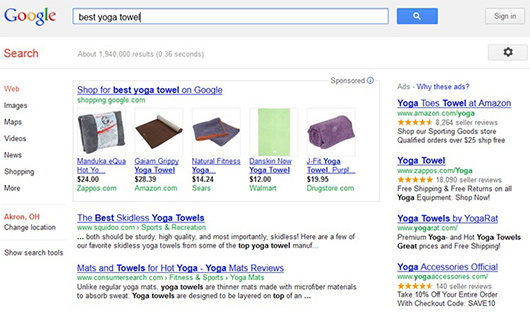

For example, someone searching ‘Buy Yoga Towel’ on Google has the intent of buying a yoga towel. Someone searching ‘yoga poses’ has the intent of viewing free yoga poses and pictures. And someone searching ‘best yoga towel’ has the intent of reading an article comparing and contrasting different yoga towels. We can separate these into three groups: Shopping Keywords, Browsing Keywords and Comparison Keywords. Notice the difference between search results?

This search returns a page filled with links to places where you can buy a yoga towel. There are AdWords ads and Google Shopping Ads all over the place because the intent of the searcher is so very product focused. Also, notice that yoga towel product pages on Amazon and Wal-Mart occupy the first three positions of the non-paid search results.

This search is more informational in nature so you don’t see a lot of advertisers on AdWords or Google Shopping bidding for it. Browsing Keywords like these don’t often lead to conversions, but they shouldn’t be completely ignored because they can be great topics for your company blog. Although they may not convert into sales, they could attract links and social shares which will help bring more traffic to your site for the more valuable shopping-related keywords. Notice that the first few results are for information related sites rather than e-commerce stores like Wal-Mart and Amazon.

Comparison related keywords can go either way. Some people search them and assume whatever is at the top is the ‘best’ while others want to read reviews and make comparisons. Notice that there are AdWords and Google shopping ads in the results, but the first few non-paid results are NOT product pages. They are links to reviews and comparisons about the different products on the market. Comparison keywords are great article and blog post topics, but you’ll have to convince readers that your product is the best if you’d like to convert these searchers into sales.
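The three groups can be roughed out with a simple word-matching heuristic. The sketch below is a starting point only: the modifier word lists are assumptions picked for this example, and real search intent is messier than a keyword match can capture.

```python
# Assumed modifier words; a real campaign would tune these lists.
SHOPPING_WORDS = {"buy", "purchase", "order", "price", "cheap"}
COMPARISON_WORDS = {"best", "top", "vs", "review", "reviews", "compare"}


def keyword_intent(keyword: str) -> str:
    """Return 'shopping', 'comparison', or 'browsing' for a search phrase."""
    words = set(keyword.lower().split())
    if words & SHOPPING_WORDS:
        return "shopping"
    if words & COMPARISON_WORDS:
        return "comparison"
    return "browsing"


for phrase in ["Buy Yoga Towel", "yoga poses", "best yoga towel"]:
    print(phrase, "->", keyword_intent(phrase))
```

Shopping words are checked first, so a phrase like ‘buy best yoga towel’ lands in the shopping group. That ordering is a design choice for this sketch, made because a purchase word is the stronger signal.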

If you’re using our Pay Per Click (PPC) Management Service you’ll find that we prefer to target keywords in the shopping and comparison category to help optimize your PPC budget. Browsing Keywords do convert on occasion, but we’d prefer to save them for a Social Media or SEO Campaign instead.

by Dave Crader

Ever had your content stolen? It recently happened to us and we certainly did not appreciate it. We spend hours writing well-researched original articles because that’s what our readers deserve. You won’t find any rewriting here. The downside to this, as we recently discovered, is becoming a target for copyright infringement. Luckily, we were able to get the copied content removed in this case, but I doubt it will always be so easy. In this post we’ll go over a few options you have if this ever happens to you, and we’ll help you identify duplicate content on your own site that you may not even know existed.

There are a lot of misconceptions about Google’s duplicate content penalty, so let me explain the basics before we dive in.

A lot of duplicate content is created by scraper websites known as ‘autoblogs.’ Autoblogs are set up by low-life scumbags who don’t have the creative skill to write their own material. These autoblogs are configured to scrape your website and steal your content almost instantly after you’ve posted it. This confuses search engine spiders because they don’t know which site is the source of the original content. If the spiders crawl the autoblog before crawling your website they will think the autoblog is the original source and you are the copier. If the spiders crawl your content before crawling the autoblog, you should be safe from penalties. It’s not as clear-cut as that, but that’s the general idea. Either way, it’s worth your time to proactively pursue content thieves just to be safe.

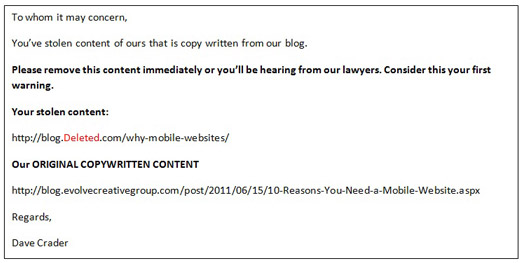

I was doing some research for our new mobile app service page and stumbled across an article that looked strikingly similar to David’s stellar mobile website blog post from back in June. I ran David’s article through copyscape.com, a free online plagiarism checker, and was surprised to find not just one infringer, but two. I immediately sent a friendly yet direct e-mail to the website owners in hopes of getting the copied content removed.

This is one of the e-mails I received back:

I won’t expose this individual since he complied, but it’s interesting that he didn’t know about basic copyright infringement laws. When an original work is fixed in a tangible form, such as a written blog post, it is protected under U.S. copyright law from the moment it is created. Copyright protects the way the idea is expressed, not the idea itself. It really does not matter if ‘Copyright ©’ appears next to the work or not. ‘Copyright ©’ is just a way to warn people that the work is protected. Like any law, there are various odd exceptions that come into play, but I’ll leave those to the U.S. Copyright Office to explain. The other infringing website owner did not respond to my e-mail, but the page was also removed promptly.

I’ve never had to go straight to the hosting provider, but Google recommends it on its duplicate content help page. I didn’t know hosting providers were required to accommodate such requests, but if they are, it seems like this would be a very effective method.

You can always have your lawyers write up an official cease and desist letter if you’d like, but this is usually pretty expensive. I’d go with option one or two before heading down this path.

If you can’t get a hold of anyone you can always file a request for Google to remove the infringing page from its search results. The copied content will remain, but no one will be able to find it in search results. This will also remove the risk of any duplicate content penalties Google may have assigned to your website.

On-site duplicate content is very common. It’s arguably more dangerous than off-site duplication because precious link juice is being spread thin in multiple directions. Off-site duplication doesn’t split link juice, it just tells search engines not to give any juice to the copied version.

For example, let’s take a look at Gojo.com. Gojo’s® homepage is splitting link juice in 6 directions causing canonicalization issues. We know this because each of the following URLs displays the exact same homepage content.

• http://gojo.com/

• http://www.gojo.com/default.asp

• http://www.gojo.com/default.aspx

• http://gojo.com/default.aspx

• http://gojo.com/default.asp

• http://www.gojo.com/

Google gets confused by this because it doesn’t know which URL is the primary version that Gojo would like to rank in search. If Google doesn’t know which version is the primary, it makes a guess based on backlinks and other factors.

The split has also caused a link equity problem. For example,

http://gojo.com/ - has 20 backlinks.

www.gojo.com - has 891 backlinks.

Google assumes www.gojo.com is the primary version because of the backlinks, but that doesn’t necessarily fix the problem. The company is still missing out on some precious link juice from the 20 people who linked to http://gojo.com instead of www.gojo.com. All of these problems can be easily fixed with a 301 redirect or a rel="canonical" attribute specifying one primary version of the URL.

A rel="canonical" attribute only tells search engine spiders which URL is the primary version; users are not redirected.

A 301 redirect will redirect both search engine spiders and users.
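As a rough illustration of picking one primary version, the sketch below maps the six homepage variants listed earlier onto a single URL and builds the matching canonical tag. It assumes `www.gojo.com` is the preferred host and that `default.asp` and `default.aspx` serve the same content as `/`. The function names are hypothetical, and the sketch only makes sense for URLs on the same site.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumed default-document names that serve the same content as "/".
DEFAULT_DOCUMENTS = {"default.asp", "default.aspx"}


def canonical_url(url: str, primary_host: str = "www.gojo.com") -> str:
    """Map duplicate homepage variants onto one primary URL."""
    parts = urlsplit(url)
    path = parts.path or "/"
    directory, _, filename = path.rpartition("/")
    if filename.lower() in DEFAULT_DOCUMENTS:
        path = directory + "/"
    return urlunsplit((parts.scheme, primary_host, path, parts.query, parts.fragment))


def canonical_link_tag(url: str) -> str:
    """Build the <link> element that declares the primary version."""
    return f'<link rel="canonical" href="{canonical_url(url)}">'


variants = [
    "http://gojo.com/",
    "http://www.gojo.com/default.asp",
    "http://www.gojo.com/default.aspx",
    "http://gojo.com/default.aspx",
    "http://gojo.com/default.asp",
    "http://www.gojo.com/",
]
print({canonical_url(u) for u in variants})
print(canonical_link_tag(variants[1]))
```

The same mapping could drive either fix: issue a 301 to the canonical URL whenever the requested URL differs from it, or print the link tag in the page head and leave users where they are.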

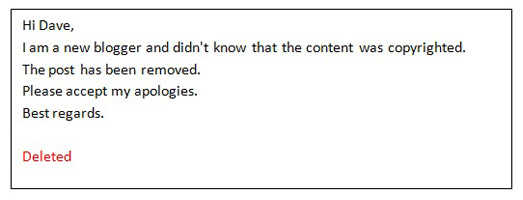

Looking at the title tags of a website is an easy way to detect duplicate content issues. You can find duplicate title tags in the Diagnostic section of Google Webmaster Tools. If you don’t have Google Webmaster Tools set up, you can use Xenu Link Sleuth instead (Mac users will need to use Screaming Frog).

Two pages that have the same title tag often have the same content as well. To fix this, you should apply a 301 or rel="canonical" attribute to specify a primary version. If one page has accrued more backlinks than the other, I’d recommend choosing the page with backlinks as the primary.
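If you already have a list of URLs and their titles, for example exported from a crawler, the duplicate check itself is simple. The sketch below assumes the crawl data is a plain mapping of URL to title text. The sample pages are invented for illustration and the function name `duplicate_titles` is hypothetical.

```python
from collections import defaultdict


def duplicate_titles(pages: dict[str, str]) -> dict[str, list[str]]:
    """Group URLs by normalized title and keep titles used more than once."""
    by_title: dict[str, list[str]] = defaultdict(list)
    for url, title in pages.items():
        by_title[" ".join(title.lower().split())].append(url)
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}


# Hypothetical crawl output (URL -> <title> text), invented for illustration.
pages = {
    "http://example.com/": "Acme Widgets | Home",
    "http://example.com/default.aspx": "Acme Widgets | Home",
    "http://example.com/about": "About Acme Widgets",
}
print(duplicate_titles(pages))
```

Titles are lowercased and whitespace is collapsed before grouping, so small formatting differences do not hide a duplicate.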

A lot of webmasters offer visitors a ‘print friendly view’ of their website’s pages. This is great for users, but bad for search engines because two pages with the exact same content will exist on the website. The rel="canonical" attribute can be used in this situation because it will eliminate the duplicate content issue while still allowing users to access the print friendly page. If a 301 is used, the user would be redirected back to the same page that he/she is currently on. The rel="canonical" attribute was actually invented for this very reason. Here at Evolve, we avoid this issue completely by using print friendly style sheets. These style sheets use a different set of CSS properties when a browser attempts to print a page. This creates a print friendly version of the page without needing a separate URL. Smashing Magazine has a great tutorial for accomplishing this.

Search engines hate duplicate content because they don’t know which version to show in search results. They’re already dealing with over a trillion web pages - why make their job even harder? If you think you may have some duplicate content issues, just give us a call at 234-571-1943 for some help.

With a new year comes exciting new possibilities. These often come in the form of resolutions, but here at Evolve, we took a stab at predicting how several of the key aspects of the Web world might change in 2012. Take a look at our thoughts on what the next big thing might be. Also, check out the reference links for even more insight into the world of possibilities for 2012.

Google will continue to own this space. Their blended results (local search, video, social, news and images) will continue to change throughout the course of the year, and it will grow as a local search tool. More small- to mid-size companies will recognize the importance and value of paying for professional SEO support.

Small businesses will try their hand at this, but struggle without some third-party support. Medium- to large-size businesses, however, will start paying attention to social metrics and succeed. New social start-ups will emerge, but we'll have to wait and see what the next big thing is going to be. Facebook, the most popular social network of 2011, will continue to dominate the social space with more acquisitions, enhancements and older users flocking to this platform. Some believe Twitter will be used for news feeds more than conversation, and will start to die off. Others who disagree note the many users that complain Facebook is clogged and annoying, while Twitter is quick and easy. Twitter will need to be watched closely.

This will continue to be one of the best forms of up-sell, cross-sell and lead generation marketing tactics in 2012. The big change will be in mobile email marketing, as it's growing rapidly. Email marketers will need to pay closer attention to mobile going forward, and should keep it in mind when they design and strategize for this platform.

PPC has historically been owned by Google, who recently blurred the lines between what is a "paid ad" and what's "not." Our usability testing has revealed many users can't tell the difference. In either case, Facebook PPC will challenge the Google giant in 2012, and will become more popular for companies that decide it makes sense to try out.

Many marketers still have no idea what this is and what it does, but the industry is hoping to see more marketers budgeting for this. With more technology and interaction being integrated into websites, the importance and popularity of this service will surely grow. We may still be a couple of years away though.

Ecommerce will continue to grow with more product-driven business models. Mobile shopping will emerge as a viable platform, and social shopping will also grow for consumer markets.

We're already seeing a shift in analytics with mobile browsing gaining traction, but it'll continue to be a hard sell to upper-management. This should be the year for mobile, but whether that holds true is still to be determined.

This one's a no-brainer. Apps have become the hottest new trend, and will continue their dominance this coming year. Costs will determine who can play in this space, and the target audience will determine what platform to invest in. iPhone? iPad? Android? All of the above? Regardless of the choices made, the staggering popularity and capability of this service will provide plenty of fuel for 2012.

With the growth in sales over the holidays, tablets will be a viable platform for Web development this coming year. Marketers and designers will need to start paying closer attention to their users and how their websites perform, look and interact on these mobile browsing devices.

Firefox will lose some additional ground to Chrome, which will grow in popularity among younger demographics. Safari will continue to hang on with the Apple OS backing it, and IE will gain more traction with the introduction of the new Windows 8 operating system. We may even see the death of IE7 (at least our industry hopes so).

Each year the amount of video viewed online continues to grow. As mobile devices get savvier with serving up optimized videos, we'll start to see videos on almost every website.

More than 85 percent of Web users are using search engines to find the products or services they're looking for, but guess how many are using "local search terms?" You'll be surprised by the number.

According to a TMP Directional Marketing Local Search Usage study, 31 percent of consumers are now using search engines as their primary resource for local business information. Combined with IYPs (Internet Yellow Pages) and local search sites such as Google Maps or Yahoo Local, the online channel now accounts for 61 percent of all primary sources of local business information.

While local search isn't an entirely brand new technology, more users are starting to embrace it, and are searching with local terms in their keyword search both on desktops and mobile devices. Take a look at your analytics, and I bet you'll see some of your referred visitors are from local search. If not, then it's time to get going.

Why not? It's (mostly) free, after all, and within minutes you can sign up, add your business listing and be on your way to getting listed. The approval process for major search engines like Google varies, but it's worth the wait.

If you have an older website with some Web history, your site may have already been indexed by local search engines, but don't trust search engines to get your listing correct. You can "claim" your business listing and add additional information about your products, services, hours, reviews and even photos. It's like getting a FREE Yellow Pages ad.

Mary Bowling of the ClickZ network published a great article informing small businesses about some great (mostly free) tools for checking your listings. The best part is it's free with no sign up required. What a concept.

Local search is growing rapidly, and it's here to stay. This is definitely a trend that can significantly help the growth of your business locally, and can provide you with opportunities to reach every customer located within the zip code areas you service at little or no cost. So what are you waiting for? Get local today.

Would you like to learn more about getting your business listed in local search engines? Give us a call today, and we'll walk you through it.