Penalties are bad. They’ll wipe your online income, get you fired and generally just make you really REALLY sad. You can, and certainly should, memorize Google’s Webmaster Guidelines, but just know that your website isn’t ever really completely safe. It’s Google’s engine and they can change the rules whenever they want to – overnight and without notice. This is not a democracy. The company will do whatever it takes to adhere to its mission statement:

“To organize the world’s information and make it universally accessible and useful.”

The only way to protect your website from Google’s penalties and filters is to think like a Google engineer. If you’re doing something to market your website that you wouldn’t feel comfortable telling a team of Google engineers about, you probably shouldn’t be doing it.

So, how do Google engineers think, you ask? Well, to start, they don’t like you manipulating their search results. Manipulation can be defined by this one simple sentence:

“Any action performed to your website with the intent of raising your non-paid search engine rankings in an unnatural way.”

This usually refers to things like:

- Keyword stuffing

- Cloaking

- Link exchanges

- and more

The problem with this definition is its broadness. Let’s say, for example, you’ve recently hired an SEO Practitioner to improve your website’s traffic from search engines. The SEO recommends you remove duplicate content, improve your site speed and write unique and relevant Meta titles and Meta descriptions for each page of your site. They are recommending these things to improve your non-paid search engine rankings, but their intent is not to manipulate search engine results. Their intent is to help Google’s spiders find and naturally index your content and create a great user experience. Help Google’s spiders find and index your content = good. Tell Google’s spiders that your content is better than others = bad. Matt Cutts, Google’s distinguished engineer, does a decent job explaining this in his video:

Does Google Consider SEO to be Spam?

Enough introduction; let's get on with the post already! Before I start I’d like to mention that this post was inspired by a great question we received from a client the other day. Let me explain:

We noticed that several pages of the client’s site had been copied and posted on another website, so we recommended the client reach out and attempt to get the copied content removed to avoid duplicate content filters from Google. Notice I said ‘duplicate content filters’ not ‘duplicate content penalties’ – more on that in a minute. The client performed a Google search on duplicate content penalties and came across this article on Google’s Webmaster Central Blog which rightfully caused a bit of confusion. Basically, the post says “duplicate content really isn’t an issue unless it is being used in a way to manipulate search engines.” After reading the post the client asked us “how important is it that we get the duplicate content removed if we’re not intentionally manipulating search engines?” That’s a great question that is impossible to answer without going over the concept of Google’s penalties and filters.

In general, there are filters and there are penalties that Google uses to organize search results. A filter can occur without any human intervention, but a penalty usually only occurs when a Google employee manually reviews your website. In the following sections we’ll be going over both.

Google Filters

One type of Google filter is activated when multiple versions of the exact same webpage are published on the Internet. This is very common in news, where one article is often copied word for word onto news aggregators such as Yahoo News and Fark. For example, The Washington Post recently published an article titled:

Presidential debate: 7 questions that should be asked … and probably won’t

Copy that title and paste it into Google with quotes around it to find that exact sentence elsewhere on the Internet.
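If you'd rather not copy and paste by hand, the same exact-phrase search can be built as a URL. This is only a minimal sketch: the function name `exact_phrase_search_url` is made up for illustration, and it assumes Google's public search page accepts the query in its `q` parameter.

```python
from urllib.parse import urlencode


def exact_phrase_search_url(phrase: str) -> str:
    """Return a Google search URL that looks for the phrase as an exact match."""
    # Wrapping the phrase in double quotes asks for an exact-match search.
    quoted = f'"{phrase}"'
    # urlencode percent-encodes the quotes, spaces and punctuation for us.
    return "https://www.google.com/search?" + urlencode({"q": quoted})


title = "Presidential debate: 7 questions that should be asked … and probably won’t"
print(exact_phrase_search_url(title))
```

Open the printed URL in a browser and you should get the same results as typing the title into Google with quotes around it.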

Notice how Google omits (filters) websites from search results that appear to be duplicates of the original. They’re doing this because they want the original article to get credit. They’re not placing a penalty on the omitted websites. They’re just not showing them. In this instance Google chose the correct original source, but that’s not always the case. Google isn’t perfect and it doesn’t have a brain. It could choose the incorrect original source and filter your website by accident. This reasoning is what led us to recommend the removal of duplicate content for our client.
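To make the idea of a filter concrete, here is a toy sketch of how a duplicate filter could behave. It is not Google's actual algorithm, which isn't public. The `Page` fields, the "first seen wins" rule and the example URLs are all assumptions made up for illustration.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    first_seen: int  # hypothetical crawl order; lower means seen earlier
    text: str


def fingerprint(text: str) -> str:
    # Normalize case and whitespace so trivially different copies hash the same.
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def filter_duplicates(pages):
    """Return (shown, omitted): one page per distinct text, the rest omitted."""
    shown = {}
    omitted = []
    for page in sorted(pages, key=lambda p: p.first_seen):
        key = fingerprint(page.text)
        if key in shown:
            omitted.append(page)  # filtered from results, not penalized
        else:
            shown[key] = page  # the first copy seen is treated as the original
    return list(shown.values()), omitted


pages = [
    Page("https://original.example/article", 2, "Seven questions that should be asked"),
    Page("https://scraper.example/article", 1, "seven questions  that should be asked"),
]
shown, omitted = filter_duplicates(pages)
print("shown:", [p.url for p in shown])
print("omitted:", [p.url for p in omitted])
```

In this made-up run the scraper's copy happened to be seen first, so the real original is the one that gets omitted. That is the kind of mistake we wanted our client to avoid.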

Another type of Google filter is activated when Google releases one of its ‘Quality Updates’ such as Penguin or Panda. These quality updates are usually released when Google engineers identify patterns (or ‘footprints’) left by websites that are manipulating search results. The updates are ongoing and are rolled out at irregular intervals over the course of several months and years.

Panda

Back in February 2011 Google tweaked its algorithm with the first version of the Panda quality update. This update was targeted at “low-quality sites—sites which are low value add for users, copy content from other websites or sites that are just not very useful.” according to Google’s press release. This is a fairly broad description that was later clarified in a Q & A session with Google’s top engineers. You might also be interested in SEOmoz’s Analysis of Winners vs Losers of the Panda Update. Basically, this update went after duplicate content that doesn’t add anything original to the Internet. It’s been updated to version 3.9.2 since its original release date but the premise remains the same. If you’re going to write something for the Internet make sure you’re adding your own unique opinion to the article and not just rewriting other articles that have already been published. This update is fairly old now so I’m not going to go too deep into it (because I don’t have anything original to say – it’s been out for over a year and there are thousands of articles covering it already), but if you have a question please feel free to leave a comment.

Penguin

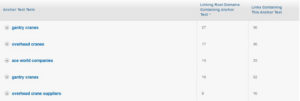

Just recently in April 2012 Google tweaked its algorithm once again with the first version of the Penguin quality update. This update was targeted at websites using “black hat webspam techniques” according to Google’s press release. Again, Google is extremely broad in its description of the update and doesn’t really explain what changed, but thanks to conclusive studies we can safely confirm that the first Penguin update was mainly about backlink anchor text (or link text) manipulation. Before Penguin, the anchor text used when linking to an external website was a significantly overpowered ranking factor for that external website. This caused many SEO Practitioners to build backlinks with anchor texts stuffed with shopping-related keywords like ‘buy yoga towel’ or ‘plumbers in Akron Ohio’ so their websites could rank higher on Google for those keywords. Let’s look at an example:

Ace World Companies is a manufacturer of Overhead Cranes, Hoists and more. According to opensiteexplorer.org their website, aceworldcompanies.com, has 170 backlinks pointed to it. If you navigate to ajaxtutorials.com/ajax-tutorials/ajax-comment-form-in-vb-net (we’re not linking to this page because Google’s filters could mistakenly consider us part of the link spam network if we did) you’ll notice there is a link to AceWorldCompanies.com in the comment section.


This is exactly the type of manipulative backlink that Google targeted with its Penguin update. The link is unnatural because it is not helpful to readers of the Ajax article. It’s also manipulative because it’s using a keyword-stuffed anchor text of ‘overhead cranes.’ Most webmasters link to other websites using anchor texts like ‘click here,’ ‘Company Name’ and ‘www.CompanyName.com.’ If a website has 17 backlinks all with ‘overhead cranes’ as the anchor text, like AceWorldCompanies.com, it’s going to be fairly easy for Google’s algorithm to pick up on it and filter it with Penguin. I can’t say for sure if aceworldcompanies.com was filtered with Penguin since I don’t have access to their Google Analytics, but with a backlink portfolio like this:

It’s probably a yes. Sorry for outing you Ace World Companies, but you were probably filtered by now anyway.
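If you want to check your own backlink portfolio for the same pattern, a rough sketch like the one below can help. The anchor list, the brand terms and the 30% threshold are all made up for illustration; Google has not published the numbers it uses, so treat this as a sanity check and not a Penguin detector.

```python
from collections import Counter


def anchor_text_shares(anchors):
    """Return {anchor text: share of all backlinks}, most common first."""
    counts = Counter(a.strip().lower() for a in anchors)
    total = sum(counts.values())
    return {text: n / total for text, n in counts.most_common()}


def looks_over_optimized(anchors, brand_terms, threshold=0.3):
    """Flag a profile where one non-brand anchor exceeds the threshold share."""
    for text, share in anchor_text_shares(anchors).items():
        # Anchors containing the company name or domain are treated as natural.
        is_brand = any(term in text for term in brand_terms)
        if not is_brand and share > threshold:
            return True
    return False


# Hypothetical export of anchor texts from a backlink tool.
anchors = ["overhead cranes"] * 7 + ["Example Co", "www.example.com", "click here"]
brand_terms = ["example co", "example.com"]

for text, share in anchor_text_shares(anchors).items():
    print(f"{share:.0%}  {text}")
print("over-optimized:", looks_over_optimized(anchors, brand_terms))
```

A natural profile tends to be dominated by the company name, the bare URL and generic phrases like ‘click here,’ so a single keyword phrase taking most of the share is the footprint to worry about.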

Manual Penalties

Manual penalties used to be a rarity because Google did not want to add personal bias to its search results, but with the influx of spam these last few years, Google has been forced to be more hands-on. Two of the best-known manual penalties were those placed on Overstock.com and JCPenney.com (I’m writing in past tense because the penalties have since been lifted). The Overstock penalty story broke in The Wall Street Journal here and the JCPenney penalty story broke in The New York Times here. Basically, JCPenney and Overstock were buying links that passed PageRank, which is a clear violation of Google’s Webmaster Guidelines. A backlink is supposed to be a third-party vote that Google can rely on to rank websites higher in search results.

If you buy links it’s not giving Google that third-party vote. It’s the same idea as the anchor text manipulation filter in Penguin. Remember, Google does not want you to tell them where your content should rank. They want to leave that up to a mathematical equation.

Paid links can be extremely hard to detect algorithmically, so Google usually has to rely on news stories and user-submitted spam reports to find them. Had The Wall Street Journal and The New York Times not outed the two companies they probably would have been safe, but when the stories broke they made Google look bad, so Google had to penalize them. Google couldn’t penalize them for long though because users were still searching for ‘JCPenney’ and ‘Overstock’ on Google. If a user searches for JCPenney and Google does not return a link to the JCPenney website, then that user is going to have a bad experience and is going to use other search engines like Bing or Yahoo to find what they need. Funny how that works, isn’t it?

Summary

In summary, the criteria Google uses to determine penalties change often. In fact, while writing this post I realized that Google updated its link scheme policy on 10/2/12 to include this fun little tidbit in bold:

“Buying or selling links that pass PageRank. This includes exchanging money for links, or posts that contain links; exchanging goods or services for links; or sending someone a “free” product in exchange for them writing about it and including a link.”

I’d be willing to bet that 90-100% of the websites on the Internet, including Google’s own, have violated and are still violating Google’s broad policies. Rather than stress out about it, just ask yourself these two simple questions:

1. Are you making Google look bad?

2. Would you feel comfortable telling a Google engineer what you’re doing to market your website?

Learn how to recover from Google penalties and filters in our next post.